
Chatbots are used by millions of people around the world every day, powered by NVIDIA GPU-based cloud servers. Now, these groundbreaking tools are coming to Windows PCs powered by NVIDIA RTX for local, fast, custom generative AI.

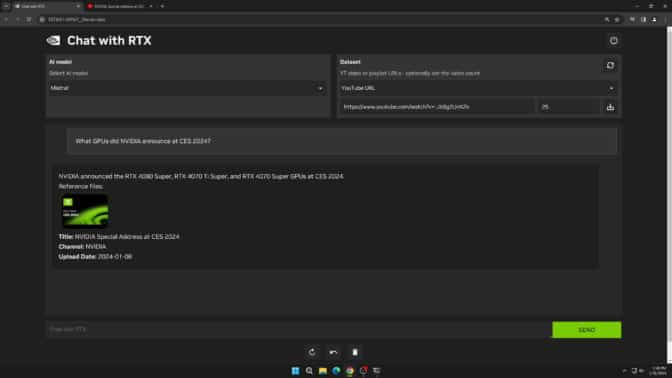

Chat with RTX, now free to download, is a tech demo that lets users personalize a chatbot with their own content, accelerated by a local NVIDIA GeForce RTX 30 Series GPU or higher with at least 8GB of video random access memory, or VRAM.

Ask Me Anything

Chat with RTX uses retrieval-augmented generation (RAG), NVIDIA TensorRT-LLM software and NVIDIA RTX acceleration to bring generative AI capabilities to local, GeForce-powered Windows PCs. Users can quickly and easily connect local files on a PC as a dataset to an open-source large language model like Mistral or Llama 2, enabling queries for quick, contextually relevant answers.

Rather than searching through notes or saved content, users can simply type queries. For example, one could ask, “What was the restaurant my partner recommended while in Las Vegas?” and Chat with RTX will scan the local files the user points it to and provide the answer with context.
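To make the RAG flow more concrete, here is a minimal, standard-library-only Python sketch of the retrieval step: rank chunks of local text against a query and prepend the best matches to the prompt that would be sent to the LLM. This is not how Chat with RTX or TensorRT-LLM implements retrieval. As an assumption for illustration, simple bag-of-words cosine similarity stands in for the embedding model a real RAG pipeline would use, and the helper names (`retrieve`, `build_prompt`) and the sample notes are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase word tokens; a real RAG pipeline would embed text with a model instead.
    return re.findall(r"[a-z0-9']+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two term-frequency vectors.
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    # Hypothetical helper: rank chunks by similarity to the query and keep the top k.
    q = Counter(tokenize(query))
    ranked = sorted(chunks, key=lambda c: cosine(q, Counter(tokenize(c))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    # Hypothetical helper: prepend retrieved passages so the LLM answers from local files.
    passages = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{passages}\n\nQuestion: {query}"


# Hypothetical sample notes standing in for the user's local files.
notes = [
    "Trip notes: in Las Vegas my partner recommended the restaurant Example Bistro.",
    "Grocery list: eggs, milk, coffee.",
    "Meeting notes: quarterly review moved to Friday.",
]
question = "What was the restaurant my partner recommended while in Las Vegas?"
prompt = build_prompt(question, retrieve(question, notes, k=1))
print(prompt)
```

The sketch stops at the assembled prompt; in a full pipeline that string would be passed to the local LLM, which generates the answer grounded in the retrieved passage.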

The tool supports various file formats, including .txt, .pdf, .doc/.docx and .xml. Point the application at the folder containing these files, and the tool will load them into its library in just seconds.
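The folder-loading step can be approximated as a file scan filtered by extension. The Python sketch below collects files with the extensions listed above. Whether Chat with RTX scans subfolders or matches extensions case-insensitively is not stated, so both behaviors are assumptions here, and `find_supported_files` is a hypothetical name. Extracting text from PDF and Word files would need additional libraries and is left out.

```python
from pathlib import Path

# Extensions listed in the article; matched case-insensitively (an assumption).
SUPPORTED_EXTENSIONS = {".txt", ".pdf", ".doc", ".docx", ".xml"}


def find_supported_files(folder: str) -> list[Path]:
    # Hypothetical helper: recursively collect files with a supported extension, sorted by path.
    root = Path(folder)
    return sorted(
        p for p in root.rglob("*")
        if p.is_file() and p.suffix.lower() in SUPPORTED_EXTENSIONS
    )
```

Calling `find_supported_files` on a documents folder returns the candidate files in sorted order; anything else in the folder, such as images, is skipped.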

Users can also include information from YouTube videos and playlists. Adding a video URL to Chat with RTX allows users to integrate this knowledge into their chatbot for contextual queries. For example, ask for travel recommendations based on content from favorite influencer videos, or get quick tutorials and how-tos based on top educational resources.

Since Chat with RTX runs locally on Windows RTX PCs and workstations, results are fast, and the user’s data stays on the device. Rather than relying on cloud-based LLM services, Chat with RTX lets users process sensitive data on a local PC without needing to share it with a third party or have an internet connection.

In addition to a GeForce RTX 30 Series GPU or higher with at least 8GB of VRAM, Chat with RTX requires Windows 10 or 11 and the latest NVIDIA GPU drivers.

Develop LLM-Based Applications With RTX

Chat with RTX shows the potential of accelerating LLMs with RTX GPUs. The app is built from the TensorRT-LLM RAG developer reference project, available on GitHub. Developers can use the reference project to develop and deploy their own RAG-based applications for RTX, accelerated by TensorRT-LLM. Learn more about building LLM-based applications.

Enter a generative AI-powered Windows app or plug-in in the NVIDIA Generative AI on NVIDIA RTX developer contest, running through Friday, Feb. 23, for a chance to win prizes such as a GeForce RTX 4090 GPU, a full, in-person conference pass to NVIDIA GTC and more.

Learn more about Chat with RTX.
